2013-08-10 Agda by Example: λ-calculus

Update: I gave a talk at NY Haskell following this blogpost, although I didn’t manage to talk about everything:

In the previous Agda example we saw how we can approach the task of verifying a sorting function. This time, we are going to write a type checker for the simply typed λ-calculus, plus a simple optimization on said terms that we will prove correct. As in the other post the bulk of the thinking has been done by other people. The type checking is a modified version of an example given in The View from the Left by Conor McBride and James McKinna,1 while the optimisation is inspired from a talk given by Adam Chlipala at POPL 2013—his Coq book Certified Programming with Dependent Types contains many similar examples.

Let’s get started.

Useful imports #

module Lambda where

open import Data.Nat using (ℕ; zero; suc; _+_; _≤?_; _≥_)

open import Data.Fin using (Fin; zero; suc; toℕ)

open import Data.List using (List; []; _∷_; length)

open import Data.Vec using (Vec; []; _∷_; lookup)

open import Relation.Binary.PropositionalEquality using (_≡_; refl; cong; cong₂)

open import Relation.Nullary using (Dec; yes; no)

open import Function using (_∘_; _$_)

open import Data.Product

After the module declaration, we include some useful modules from the Agda standard library:

-

Data.Natdefines natural numbers. -

Data.Findefines an inductive families to represent the type of all numbers less than a given number. For instanceFin 3will be inhabited by0,1, and2. Another interpretation is thatFin nis the type inhabited bynelements. -

Data.List, predictably, defines finite lists. -

Data.Vecdefines lists indexed by their length. This allows, for example, for safe indexing of elements. -

Relation.Binary.PropositionalEqualitydefines propositional equality as presented in the previous post.cong₂is the two-argument version ofcong. -

Relation.Nullarydefines a type for decidable relations,Dec:data Dec (P : Set) : Set where yes : ( p : P) → Dec P no : (¬p : ¬ P) → Dec P -

Functionexports some common utilities regarding functions that should be familiar to the Haskell programmer, such as function composition (∘) and explicit application ($).

Simple types and raw terms #

The language we are going to define is a simple simply typed λ-calculus. The types for our language will be of two kinds: natural numbers and functions (arrow types):

infixr 30 _⇒_

data Type : Set where

nat : Type

_⇒_ : Type → Type → Type

We will use de Bruijn indices to represent bound variables, where n represents the variable bound by the n-th λ going towards the top of the term. For example, using an Haskell notation for λs, we will have that

| Named | de Bruijn |

|---|---|

λx → x

|

λ 1

|

λx → λy → x

|

λ λ 2

|

λx → λy → λz → x z (y z)

|

λ λ λ 3 1 (2 1)

|

and so on. de Bruijn notation is a terrible device for humans to use directly, but it is often more convenient when dealing with terms mechanically. When parsing a language it is easy to go from names to nameless, so that we can have the best of both worlds.

Thus we will have:

infixl 80 _·_

data Syntax : Set where

-- A variable, the ℕ being a de Bruijn index.

var : ℕ → Syntax

-- A number literal.

lit : ℕ → Syntax

-- Addition.

_⊕_ : Syntax → Syntax → Syntax

-- Function application.

_·_ : Syntax → Syntax → Syntax

-- Lambda abstraction, the type indicates the type of the argument: no

-- type inference here.

lam : Type → Syntax → Syntax

Typed terms, and type checking #

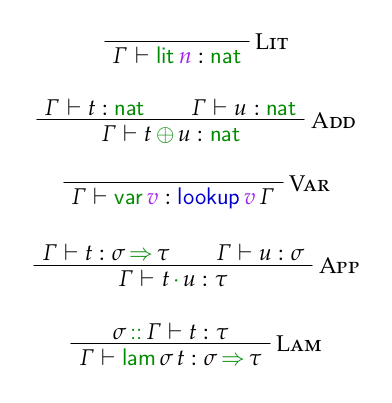

Our λ-calculus has a very unsurprising type system. Typing judgements will be done in a context, holding the types of the abstracted variables. Calling the context Γ, we can give typing judgements for our syntax:

For those unfamiliar with typing rules like the ones above, they can be read as “given what’s above the bar, what’s below holds”. In our case we have judgements of the form Γ ⊢ t : τ, which can be read as “In context Γ, term t has type τ”.

Lit says that number literals have type nat. Add says that given two terms of type nat, we can add them together yielding another term of type nat. Var says that given a variable we can get its type by looking it up in the context—we haven’t defined what a context is but we will soon. App says that given a function of type τ ⇒ σ we can apply it to a term of type τ to get a term of type σ. Finally, if we have a term t : τ in a context with σ “at its head”, we can form a function of type σ ⇒ τ, typed in the context with σ removed (Lam).

Now, taking advantage of Agda’s facilities, we can define a Term data type to represent terms together with their type—a Term will essentially include a derivation that its type is valid.

We will represent the context as a Vec containing Types. The fact that Vec carry their length at the type is useful, since it will indicate how many variables are in scope, and thus what the largest de Bruijn index is.

Ctx : ℕ → Set

Ctx = Vec Type

A type checked Term will be parametrised over a context Γ of some length n. Moreover, it will be indexed by its type. The type is an index because different constructors will be typed differently, just like in the typing derivations.

data Term {n} (Γ : Ctx n) : Type → Set where

var : ∀ {τ} (v : Fin n) → τ ≡ lookup v Γ → Term Γ τ

lit : ℕ → Term Γ nat

_⊕_ : Term Γ nat → Term Γ nat → Term Γ nat

_·_ : ∀ {σ τ} → Term Γ (σ ⇒ τ) → Term Γ σ → Term Γ τ

lam : ∀ σ {τ} → Term (σ ∷ Γ) τ → Term Γ (σ ⇒ τ)

Note that in the var case we could have written

var : (v : Fin n) → Term Γ (lookup v Γ)

However this would have caused problems further down the line,2 and thus we quantify over a type τ and use an explicit equality.

Also in var, we use Fin to make sure that the index is indeed “in scope”. If the context is of length n, an index of type Fin n makes sure that we can look up the type of the variable, since Fin n represents the type of all naturals less than n:

lookup : ∀ {n} {A : Set} → Fin n → Vec A n → AClosed terms will be those living in empty contexts:

Closed : Type → Set

Closed = Term []

For example, we can write a function doubling numbers in Term. The type will predictably be nat ⇒ nat:

double : Closed (nat ⇒ nat)

double = lam nat (var zero refl ⊕ var zero refl)

We can also write a function that forgets the type information and gets us Syntax from Term:

erase : ∀ {n} {Γ : Ctx n} {τ} → Term Γ τ → Syntax

erase (var v _) = var (toℕ v)

erase (lit n) = lit n

erase (t ⊕ u) = erase t ⊕ erase u

erase (t · u) = erase t · erase u

erase (lam σ t) = lam σ (erase t)

Type equality #

Before writing our type checking function, we need some additional tools. We will begin by defining a decision procedure for equality between types. First two lemmas telling us that if two arrow types are equal, both sides of the arrows are equal too:

≡⇒₁ : ∀ {σ σ′ τ τ′} → σ ⇒ τ ≡ σ′ ⇒ τ′ → σ ≡ σ′

≡⇒₁ refl = refl

≡⇒₂ : ∀ {σ σ′ τ τ′} → σ ⇒ τ ≡ σ′ ⇒ τ′ → τ ≡ τ′

≡⇒₂ refl = refl

Then the actual function. Dec is inhabited by either evidence that the relation holds, using the constructor yes; or that it doesn’t, using no:

_≟_ : (τ σ : Type) → Dec (τ ≡ σ)

nat ≟ nat = yes refl

nat ≟ _ ⇒ _ = no λ()

_ ⇒ _ ≟ nat = no λ()

σ ⇒ τ ≟ σ′ ⇒ τ′ with σ ≟ σ′ | τ ≟ τ′

σ ⇒ τ ≟ .σ ⇒ .τ | yes refl | yes refl = yes refl

σ ⇒ τ ≟ σ′ ⇒ τ′ | no σ≢σ′ | _ = no (σ≢σ′ ∘ ≡⇒₁)

σ ⇒ τ ≟ σ′ ⇒ τ′ | _ | no τ≢τ′ = no (τ≢τ′ ∘ ≡⇒₂)

Note that in the cases where different constructors are present we use λ(), which is a shorthand for a function with an empty pattern—meaning that its input cannot be inhabited. For example when faced with nat ≟ σ ⇒ τ to prove the inequality we need to show that ¬ (nat ≡ σ ⇒ τ), which is a shorthand for (nat ≡ σ ⇒ τ) → ⊥—“We can derive falsity given that nat is equal to an arrow type.” But given how ≡ is defined Agda knows that its indices must be the same, and thus that nat ≡ σ ⇒ τ cannot be inhabited, allowing us to use an empty pattern.

Moreover, note how we use dependent pattern matching when recursing down the structure of the types: when pattern matching on an equality proof in a yes using refl the two relevant types are constrained to be the same using dotted patterns, which allows us to use refl again to prove equality of the type as a whole.

From Fin to ℕ #

The second tool that we will use is a “view” to tell us whether a given ℕ “fits” in a Fin n.

data Fromℕ (n : ℕ) : ℕ → Set where

yes : (m : Fin n) → Fromℕ n (toℕ m)

no : (m : ℕ) → Fromℕ n (n + m)

Fromℕ is parametrised over the “upper bound” n, and indexed over the ℕ that we are bringing into Fin n. We can then write a function that tries to convert a number m to a Fin n:

fromℕ : ∀ n m → Fromℕ n m

fromℕ zero m = no m

fromℕ (suc n) zero = yes zero

fromℕ (suc n) (suc m) with fromℕ n m

fromℕ (suc n) (suc .(toℕ m)) | yes m = yes (suc m)

fromℕ (suc n) (suc .(n + m)) | no m = no m

Type checking #

Finally we can proceed to the actual type checking procedure. As with Fromℕ, we define a data type indexed over what will be the input of our function—some piece of untyped Syntax:

data Check {n} (Γ : Ctx n) : Syntax → Set where

yes : (τ : Type) (t : Term Γ τ) → Check Γ (erase t)

no : {e : Syntax} → Check Γ e

Check is parametrised over contexts. If type checking succeeds, we return a type τ and a term t : τ, the erasure of t being the Syntax we were type checking—the yes constructor. We can fail at any time using the constructor no.3

Our type checking procedure will work with a context and some Syntax:

check : ∀ {n} (Γ : Ctx n) (t : Syntax) → Check Γ t

With var, we make sure that the index is in scope using fromℕ. If it is, the type is determined by what’s in the context at the right index:

check {n} Γ (var v) with fromℕ n v

check {n} Γ (var .(toℕ v)) | yes v = yes (lookup v Γ) (var v refl)

check {n} Γ (var .(n + m)) | no m = no

Literals are of type nat:

check Γ (lit n) = yes nat (lit n)

For additions, we recursively type check the two sides. If they are both nat, the resulting term is nat too. Otherwise, type checking fails:

check Γ (t ⊕ u) with check Γ t | check Γ u

check Γ (.(erase t) ⊕ .(erase u)) | yes nat t | yes nat u = yes nat (t ⊕ u)

check Γ (_ ⊕ _) | _ | _ = no

Note that when checking against the results of the recursive calls, we use dotted matches to show the relationship between the input Syntax terms and the type checked terms: the former is the erasure of the latter. This way we can invoke yes so that the result will be well typed.

The interactive Agda mode is tremendously useful in these situations. If we are in the situation

check Γ (t ⊕ u) with check Γ t | check Γ u

check Γ (t ⊕ u) | p1 | p2 = {!!}

We can pattern match by placing p1 in the hole and then invoking C-c C-c, which will result in

check Γ (.(erase t) ⊕ u) | yes τ t | p2 = {!!}

Agda automatically places the dotted patterns. Then after doing the same for p2 and matching nat, we are left with

check Γ (.(erase t) ⊕ .(erase u)) | yes nat t | yes nat u = {!!}The goal for that hole, as Agda reminds us, is

Check Γ (erase t ⊕ erase u)

We can type yes and then C-r to refine the hole, and then C-a in each hole to tell Agda to try to search for an appropriate term, which in this case succeeds. In general with strong enough types we only write an outline of the function and then a lot of details can be filled in automatically.

For what concerns application, we check that the term we are applying something to has a function type, and that the argument’s type is equal to the domain of said function:

check Γ (t · u) with check Γ t | check Γ u

check Γ (.(erase t) · .(erase u)) | yes (σ ⇒ τ) t | yes σ′ u with σ ≟ σ′

check Γ (.(erase t) · .(erase u)) | yes (σ ⇒ τ) t | yes .σ u | yes refl = yes τ (t · u)

check Γ (.(erase t) · .(erase u)) | yes (σ ⇒ τ) t | yes σ′ u | no _ = no

check Γ (t · u) | _ | _ = no

Finally, with λs, we check that the body of the function is well typed in the context plus the type of the argument. If that’s the case, the resulting type is an arrow type:

check Γ (lam σ t) with check (σ ∷ Γ) t

check Γ (lam σ .(erase t)) | yes τ t = yes (σ ⇒ τ) (lam σ t)

check Γ (lam σ t) | no = no

Type checking the syntax for double will give good results:

> C-c C-n check [] (lam nat (var 0 ⊕ var 0))

yes (nat ⇒ nat) (lam nat (var zero refl ⊕ var zero refl))While ill-typed examples will fail:

> C-c C-n check [] (lam nat (var 0 · var 0))

no

> C-c C-n check [] (lam (nat ⇒ nat) (var 0 ⊕ var 0))

noEmbedding terms #

Now that we have a type checker, we can embed typed terms into Agda. This technique is a common and cheap way to give semantics to programming languages that we are modelling inside a theorem prover—we borrow the host language semantics.

In our case, we can easily define a function taking Type into Agda’s Set:

⟦_⟧ : Type → Set

⟦ nat ⟧ = ℕ

⟦ σ ⇒ τ ⟧ = ⟦ σ ⟧ → ⟦ τ ⟧

Then, to transport an arbitrary Term Γ τ to ⟦ τ ⟧, we need an environment storing values for the bound variables:

infixr 5 _∷_

data Env : ∀ {n} → Ctx n → Set where

[] : Env []

_∷_ : ∀ {n} {Γ : Ctx n} {τ} → ⟦ τ ⟧ → Env Γ → Env (τ ∷ Γ)

Env Γ stores values corresponding to the types stored in Γ. We can extend an existing Env by consing a value of the right type to it, and extending the context.

We can also lookup values into the Env just like we can index into Vecs:

lookupEnv : ∀ {n} {Γ : Ctx n} (m : Fin n) → Env Γ → ⟦ lookup m Γ ⟧

lookupEnv zero (x ∷ _) = x

lookupEnv (suc n) (_ ∷ env) = lookupEnv n env

With these tools, we can define a function evaluating a Term into an Agda value of the right type:

_[_] : ∀ {n} {Γ : Ctx n} {τ} → Env Γ → Term Γ τ → ⟦ τ ⟧

env [ var v refl ] = lookupEnv v env

env [ lit n ] = n

env [ t ⊕ u ] = env [ t ] + env [ u ]

env [ t · u ] = (env [ t ]) (env [ u ])

env [ lam _ t ] = λ x → (x ∷ env) [ t ]

Now we can verify, for example, that double does the right thing:

double′ : ⟦ nat ⇒ nat ⟧

double′ = [] [ double ] -- A closed term, in an empty environment

doubleTest : double′ 3 ≡ 6

doubleTest = refl

Constant folding #

To conclude, we will write a simple optimisation our terms, and prove that it is sound by showing that it always preserves the semantics of the original term, using the embedding above.

To represent “optimised” terms, we will use a record parametrised over the original term and holding the optimised version, plus evidence that for all environments evaluating the original and optimised term will yield the same Agda value:

record Optimised {n} {Γ : Ctx n} {σ} (t : Term Γ σ) : Set where

constructor opt

field

optimised : Term Γ σ

sound : ∀ {env} → env [ t ] ≡ env [ optimised ]

However, to succeed, we need to postulate an axiom:

postulate ext : ∀ {A B : Set} {f g : A → B} → ({x : A} → f x ≡ g x) → f ≡ g

This axiom says that if we have two functions f and g, and we have evidence that for all inputs the functions have equal outputs, then the functions themselves can be considered equal. This is usually know as functional extensionality, and we cannot prove it in Agda and theorem provers based on similar theories.4 Postulating extensionality is still (hopefully) consistent with Agda’s theory, although obviously we will not be able to compute with it—definitions pattern matching on equality proofs will get stuck on invocations of ext.

With this axiom and our record Optimised, we will write the optimisation and the proof at the same time. The optimisation will consist of reducing constant expressions to number literals, so that for example

lit 3 ⊕ lit 7will be reduced to

lit 10So, our function will take a term and produce an optimised version of it:

cfold : ∀ {n} {Γ : Ctx n} {τ} (t : Term Γ τ) → Optimised t

Variables and literals stay untouched, so the proof of equality will be trivial:

cfold (var v p) = opt (var v p) refl

cfold (lit x) = opt (lit x) refl

For applications, we call cfold recursively, and then we use cong₂ to combine the resulting proofs of soundness—since evaluating functions means applying them, we use _$_:

cong₂ : {A B C : Set} (f : A → B → C) {x y u v} → x ≡ y → u ≡ v → f x u ≡ f y v

_$_ : ∀ {A : Set} {B : A → Set} → ((x : A) → B x) → ((x : A) → B x)

cong₂ _$_ : {A B : Set} {f g : A → B} {x y : A} → f ≡ g → x ≡ y → (f $ x) ≡ (g $ y)

cfold (t · u) with cfold t | cfold u

... | opt t′ p | opt u′ q = opt (t′ · u′) (cong₂ _$_ p q)

For λs, we need to use ext to prove that the optimised function is equal to the original one:

cfold (lam σ t) with cfold t

... | opt t′ p = opt (lam σ t′) (ext p)

Finally, with additions, we perform the actual optimisation. If both sides after optimisation are number literals we can replace them with another literal. Otherwise we leave the addition in place. We also combine the proofs of equality of the sides with cong₂ +, given that the denotation uses +.5

cfold (t ⊕ u) with cfold t | cfold u

... | opt (lit n) p | opt (lit m) q = opt (lit (n + m)) (cong₂ _+_ p q)

... | opt t′ p | opt u′ q = opt (t′ ⊕ u′) (cong₂ _+_ p q)

And that’s it.

You can comment this post on reddit.

-

See also the Agda version by Ulf Norell in his Dependently Typed Programming in Agda tutorial, after which some of the data types for this tutorial are patterned after.↩︎

-

See the footnote ad the end of the section about constant folding.↩︎

-

Notice that the

noconstructor does not include evidence that the term is ill-typed. This could be fixed by having a data type representing ill-typed terms.Precise types are usually a good idea, not only because they describe the program better to humans, but also because machines can do more with it. For example, when using

AgsywithCheck,badis always going to be a valid candidate, even if the term we are checking is well typed.Here we have chosen a simpler way for brevity, especially since the “error” data type would be fairly boring.↩︎

-

It has been an ongoing challenge to find a workable theory where functional extensionality holds—a recent proposal is observational equality.

With “workable” we mean among other things a theory where type checking is decidable, something that we lose if for example we “solve” the problem in a naïve way by adding a rule to derive definitional equality (the meta-judgement in the type checker for term equality) from propositional equality.

More broadly the theme of equality is a very debated one in type theory, with one related development getting a lot of people excited lately.↩︎

-

If we had

varto havelookup v Γas an index, we would have been unable to pattern match on the optimised term here, since Agda would have got stuck trying to decide if there should be a case forvar.↩︎